Deep Dive #1: Data-Oriented Design for a Robot Engine

Context

Copper (or Cu29) is a software engine for developing complex robots in Rust. In our past work building robots, one recurring frustration was the absolute misery of integration we inevitably faced at the end of each project. Some of it feels unavoidable, because building robots is naturally complex, but a large part came purely from the unpredictability of the runtime environment.

How are robots modeled, and what makes their runtime so unpredictable?

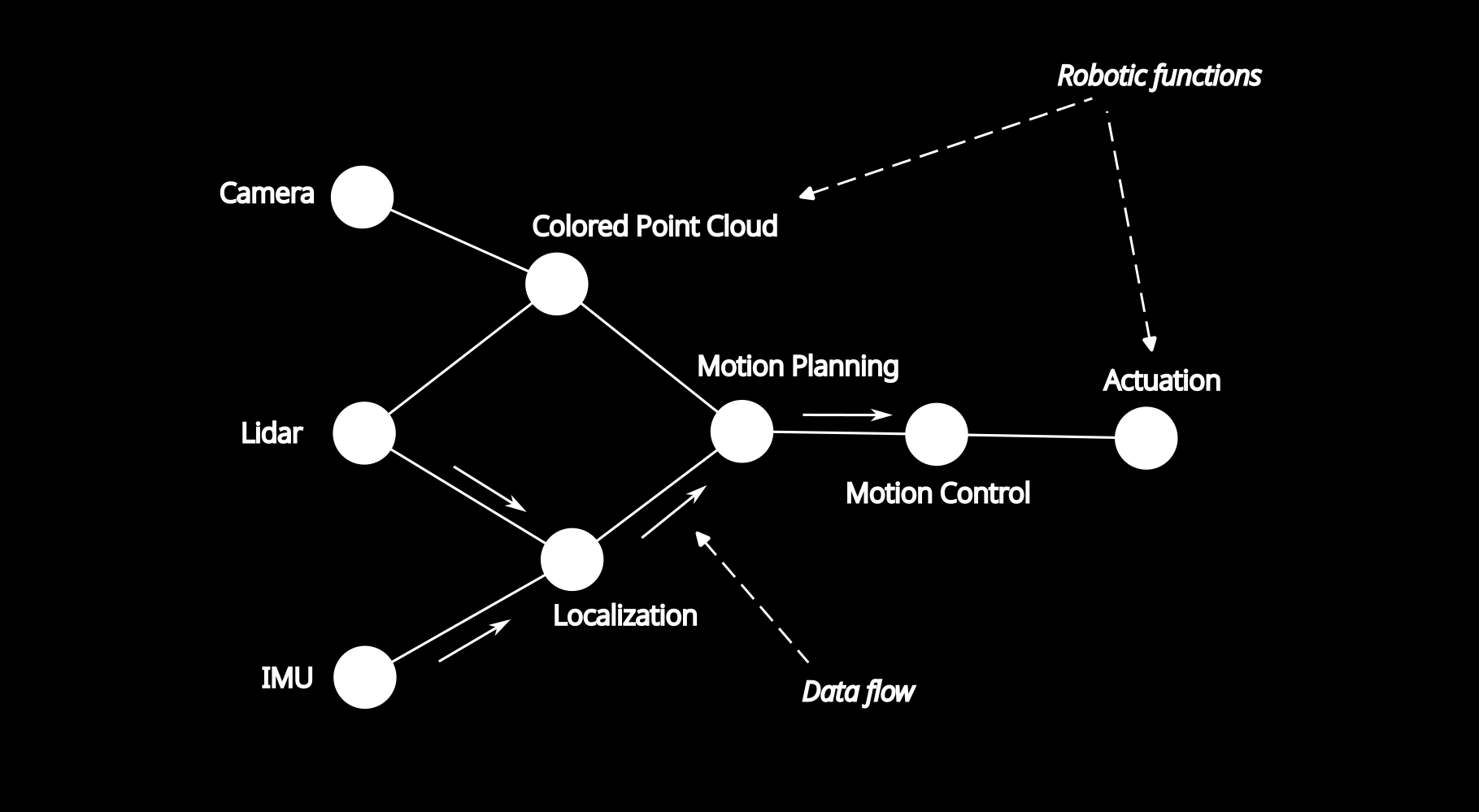

Robots need to observe the world through sensors, reason about it through algorithms, and then act accordingly (actuation).

One key attribute you need from your framework is observability: you must decompose your codebase into functions with clear inputs and outputs. This lets developers not only test their functions independently but also log real-world inputs and build datasets that accurately reflect what their robot encounters.
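To make the idea concrete, here is a minimal sketch of a task with explicit inputs and outputs whose real inputs are recorded for later replay. Everything in it is hypothetical and not Copper's API: the `RangeReading` and `SpeedCommand` types, the `obstacle_slowdown` function and its thresholds, and the `Recorder` that logs each input next to the output it produced.

```rust
/// Hypothetical sensor reading; field names are illustrative only.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct RangeReading {
    pub timestamp_ns: u64,
    pub distance_m: f32,
}

/// Hypothetical actuator command.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct SpeedCommand {
    pub speed_mps: f32,
}

/// A pure function with an explicit input and output: the same reading
/// always yields the same command, so it can be tested in isolation.
pub fn obstacle_slowdown(input: &RangeReading) -> SpeedCommand {
    // Illustrative thresholds: stop below 0.5 m, full speed above 2 m,
    // linear ramp in between.
    let speed = if input.distance_m <= 0.5 {
        0.0
    } else if input.distance_m >= 2.0 {
        1.0
    } else {
        (input.distance_m - 0.5) / 1.5
    };
    SpeedCommand { speed_mps: speed }
}

/// Logs every real-world input alongside the output it produced,
/// building a dataset that can be replayed against a new version.
#[derive(Default)]
pub struct Recorder {
    pub log: Vec<(RangeReading, SpeedCommand)>,
}

impl Recorder {
    /// Runs the task once and records the (input, output) pair.
    pub fn run(&mut self, input: RangeReading) -> SpeedCommand {
        let output = obstacle_slowdown(&input);
        self.log.push((input, output));
        output
    }

    /// Replays the recorded inputs through `f` and counts changed outputs.
    pub fn replay(&self, f: fn(&RangeReading) -> SpeedCommand) -> usize {
        self.log.iter().filter(|(i, o)| f(i) != *o).count()
    }
}
```

Because `obstacle_slowdown` depends only on its argument, the recorded log doubles as a regression dataset: `replay` runs a (possibly modified) function over the logged inputs and counts how many outputs changed.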

These decomposed functions can be represented as a graph, with their inputs coming from either a sensor or another algorithm and their outputs going to another algorithm or actuators.
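One way to represent such a graph, sketched here with hypothetical names (`TaskGraph`, `Kind`, `add_task`, `connect`, `consumers`) rather than Copper's actual types, is a list of tasks tagged by role plus a list of producer-to-consumer edges. The sketch rejects edges that feed into a sensor or come out of an actuator, matching the description above.

```rust
/// Role of a node in the task graph.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Kind {
    Sensor,    // produces data from the world
    Algorithm, // consumes and produces data
    Actuator,  // consumes data and acts on the world
}

#[derive(Default)]
pub struct TaskGraph {
    pub names: Vec<&'static str>,
    pub kinds: Vec<Kind>,
    /// (producer, consumer) pairs, indexing into `names` and `kinds`.
    pub edges: Vec<(usize, usize)>,
}

impl TaskGraph {
    /// Adds a task and returns its index.
    pub fn add_task(&mut self, name: &'static str, kind: Kind) -> usize {
        self.names.push(name);
        self.kinds.push(kind);
        self.names.len() - 1
    }

    /// Connects the output of `from` to the input of `to`.
    pub fn connect(&mut self, from: usize, to: usize) -> Result<(), String> {
        if self.kinds[from] == Kind::Actuator {
            return Err(format!("{} is an actuator and has no output", self.names[from]));
        }
        if self.kinds[to] == Kind::Sensor {
            return Err(format!("{} is a sensor and has no input", self.names[to]));
        }
        self.edges.push((from, to));
        Ok(())
    }

    /// Names of the tasks that consume the output of `task`.
    pub fn consumers(&self, task: usize) -> Vec<&'static str> {
        self.edges
            .iter()
            .filter(|&&(from, _)| from == task)
            .map(|&(_, to)| self.names[to])
            .collect()
    }
}
```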

Because these functions all run concurrently, frameworks typically use locks and leaky circular buffers to cope with the different rates and scheduling of each function.
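As an illustration of this traditional approach (not Copper's), here is a minimal leaky circular buffer shared behind a `Mutex`. It is a sketch with hypothetical names (`LeakyRing`, `fast_producer_demo`): when the buffer is full, `push` overwrites the oldest sample, so a slow consumer silently loses data.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Fixed-capacity ring buffer that drops the oldest element when full.
pub struct LeakyRing<T> {
    buf: Vec<Option<T>>,
    head: usize, // index of the oldest element
    len: usize,
    pub dropped: usize, // how many samples were overwritten
}

impl<T> LeakyRing<T> {
    pub fn with_capacity(cap: usize) -> Self {
        assert!(cap > 0, "capacity must be non-zero");
        Self { buf: (0..cap).map(|_| None).collect(), head: 0, len: 0, dropped: 0 }
    }

    pub fn push(&mut self, v: T) {
        let cap = self.buf.len();
        if self.len == cap {
            // Full: overwrite the oldest element and advance the head.
            self.buf[self.head] = Some(v);
            self.head = (self.head + 1) % cap;
            self.dropped += 1;
        } else {
            let tail = (self.head + self.len) % cap;
            self.buf[tail] = Some(v);
            self.len += 1;
        }
    }

    pub fn pop(&mut self) -> Option<T> {
        if self.len == 0 {
            return None;
        }
        let v = self.buf[self.head].take();
        self.head = (self.head + 1) % self.buf.len();
        self.len -= 1;
        v
    }
}

/// A producer thread pushes `n` samples into a shared, locked ring;
/// returns what the consumer finds afterwards and how many were dropped.
pub fn fast_producer_demo(n: u32, cap: usize) -> (Vec<u32>, usize) {
    let ring = Arc::new(Mutex::new(LeakyRing::<u32>::with_capacity(cap)));
    let producer = {
        let ring = Arc::clone(&ring);
        thread::spawn(move || {
            for i in 0..n {
                // Every push takes the lock: a point of contention.
                ring.lock().unwrap().push(i);
            }
        })
    };
    // Joining first makes this demo deterministic.
    producer.join().unwrap();
    let mut guard = ring.lock().unwrap();
    let mut seen = Vec::new();
    while let Some(v) = guard.pop() {
        seen.push(v);
    }
    (seen, guard.dropped)
}
```

With `n = 10` and `cap = 4`, the consumer sees only the last four samples and six are dropped. In a real system the consumer runs on its own thread at its own tempo, so which samples survive depends on scheduling, which is exactly the unpredictability described above.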

This is where your system can end up looking like a pinball machine during an Apollo 13 multi-ball (for non-pinball players: this means 13 balls in play simultaneously!).

For example, in a self-driving car, you can have between 100 and 200 of these “tasks,” each mapped to threads or processes, randomly grabbing resources like vector compute and memory bandwidth, and generating context switches all over the place. The complexity of the system can quickly exceed what an engineer can manage.

How do we do this in Copper?

A short animation showing Copper's data-oriented design (DoD)

We took inspiration from game engine developers, but with a twist.

We start with the same graph structure.

We build an “execution plan” by predicting the chain of events: which task must run after which. In games, the “game loop” follows much the same structure from one game to the next. Here’s the twist: we build this loop at compile time.
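The text does not say which algorithm Copper uses to order tasks. One standard way to derive such a plan from a task graph is a topological sort, and the sketch below uses Kahn's algorithm in a hypothetical `execution_plan` function. For simplicity it runs at runtime, not at compile time as Copper does. Tasks are plain indices and edges are (producer, consumer) pairs.

```rust
use std::collections::VecDeque;

/// Returns an order in which every producer runs before its consumers,
/// or `None` if the graph contains a cycle.
pub fn execution_plan(n: usize, edges: &[(usize, usize)]) -> Option<Vec<usize>> {
    // Count incoming edges and collect each task's consumers.
    let mut indegree = vec![0usize; n];
    let mut consumers = vec![Vec::<usize>::new(); n];
    for &(from, to) in edges {
        consumers[from].push(to);
        indegree[to] += 1;
    }

    // Tasks with no inputs (e.g. sensors) are ready immediately.
    let mut ready: VecDeque<usize> = (0..n).filter(|&t| indegree[t] == 0).collect();
    let mut order = Vec::with_capacity(n);

    while let Some(t) = ready.pop_front() {
        order.push(t);
        // Once t is scheduled, its consumers have one fewer pending input.
        for &c in &consumers[t] {
            indegree[c] -= 1;
            if indegree[c] == 0 {
                ready.push_back(c);
            }
        }
    }

    // If some tasks never became ready, they sit on a cycle.
    if order.len() == n { Some(order) } else { None }
}
```

For a graph where 0 (camera) and 1 (IMU) feed 2 (fusion), which feeds 3 (planner) and then 4 (motors), the plan is `[0, 1, 2, 3, 4]`. A cycle yields `None`, because no task on it can ever become ready.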

We then generate the code to create that loop. This is where Rust’s procedural macros shine, allowing us to meta-program a sort of compiler plugin. You can see how it looks here.
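Procedural macros have to live in their own crate, so to keep things self-contained the sketch below uses a declarative `macro_rules!` macro as an analogy; it is not Copper's generated code. The hypothetical `unrolled_loop!` macro expands a fixed list of tasks into one straight-line function at compile time, with no scheduler and no locks. `Messages`, `read_sensor`, `plan`, and `actuate` are illustrative stand-ins.

```rust
/// Expands into `pub fn $fn_name(state: &mut $state)` that calls each
/// task in the listed order. The order is fixed when the code compiles.
macro_rules! unrolled_loop {
    ($fn_name:ident, $state:ty, [$($task:ident),* $(,)?]) => {
        pub fn $fn_name(state: &mut $state) {
            $( $task(state); )*
        }
    };
}

/// All messages of one iteration, held in a single struct.
#[derive(Default, Debug, Clone, Copy, PartialEq)]
pub struct Messages {
    pub range_m: f32,
    pub speed_mps: f32,
    pub motor_pwm: u16,
}

// Stand-in for a sensor driver: always reports 1.25 m.
fn read_sensor(m: &mut Messages) {
    m.range_m = 1.25;
}

// Stop when an obstacle is closer than 0.5 m, otherwise go at 1 m/s.
fn plan(m: &mut Messages) {
    m.speed_mps = if m.range_m < 0.5 { 0.0 } else { 1.0 };
}

// Convert speed into a hypothetical PWM duty value.
fn actuate(m: &mut Messages) {
    m.motor_pwm = (m.speed_mps * 1000.0) as u16;
}

// Expands to a function calling read_sensor, plan, then actuate.
unrolled_loop!(run_one_iteration, Messages, [read_sensor, plan, actuate]);
```

The design choice this illustrates: because the call sequence is baked in at compile time, there is nothing left to decide at runtime, so each iteration runs the same code path in the same order.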

This approach lets us lay out the memory used by the execution efficiently. This is data-oriented design: all accesses are linear, which leverages the latency-masking features of modern computer architectures, cache optimizations, and so on. The result is significantly lower latency than traditional middleware. For example, our version of a simple “LED caterpillar” here has 100x lower latency than this one, built more traditionally.
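A minimal sketch of what “linear accesses” can mean in practice, assuming a design where each iteration's task outputs live in one preallocated slot, laid out in execution-plan order. `Slot`, `SlotPool`, and the field names are hypothetical, not Copper's types. The buffer is allocated once, so the hot loop never allocates, and each step writes the sensor, planner, and actuator fields in the order they sit in memory.

```rust
/// Hypothetical outputs of three tasks for one iteration.
/// `repr(C)` keeps the fields in declaration (execution-plan) order.
#[derive(Default, Clone, Copy, Debug, PartialEq)]
#[repr(C)]
pub struct Slot {
    pub sensor_out: [f32; 4], // e.g. four range readings
    pub planner_out: f32,
    pub actuator_out: u16,
}

/// Slots are allocated once and reused in order.
pub struct SlotPool {
    slots: Vec<Slot>,
    next: usize,
}

impl SlotPool {
    pub fn new(capacity: usize) -> Self {
        assert!(capacity > 0, "capacity must be non-zero");
        Self { slots: vec![Slot::default(); capacity], next: 0 }
    }

    /// Runs one iteration of a fixed plan, writing into the next slot.
    pub fn step(&mut self, readings: [f32; 4]) -> &Slot {
        let idx = self.next;
        self.next = (self.next + 1) % self.slots.len();
        let slot = &mut self.slots[idx];
        *slot = Slot::default(); // recycle the slot in place, no allocation
        slot.sensor_out = readings;
        // The planner reads the sensor output stored just before its field.
        slot.planner_out = slot.sensor_out.iter().copied().fold(f32::INFINITY, f32::min);
        slot.actuator_out = if slot.planner_out < 0.5 { 0 } else { 1000 };
        slot
    }

    pub fn capacity(&self) -> usize {
        self.slots.len()
    }

    /// Address of the backing buffer; it stays the same because nothing reallocates.
    pub fn base_ptr(&self) -> *const Slot {
        self.slots.as_ptr()
    }
}
```

Reusing slots in a fixed order keeps the working set small and predictable, which is what gives caches and hardware prefetchers a chance to hide memory latency. The trade-off is that capacity must be chosen up front.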

Copper is open source and developed in the open! If you are interested in the topic, want to contribute, or have questions, feel free to jump into our online discussion group here.